Kubernetes Installation and Operations 101

Prerequisites

Server configuration changes before installing Kubernetes

- Reference: https://www.mirantis.com/blog/how-install-kubernetes-kubeadm/

- Disable swap

# Temporary (until the next reboot)

$ sudo swapoff -a

# Permanent: comment out the swap entry in /etc/fstab, as in the line below

$ sudo vi /etc/fstab

...

# /dev/mapper/kube--master--vg-swap_1 none swap sw 0 0
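The manual fstab edit can also be scripted. This is a minimal sketch, assuming swap entries carry `swap` as a whitespace-separated field; the function name `comment_out_swap` is hypothetical, and a `.bak` backup is written next to the edited file.

```shell
# Hypothetical helper: comment out active swap entries in an fstab-style file.
# $1: path to the file (try it on a copy first; /etc/fstab itself needs root).
comment_out_swap() {
  # Leave existing comment lines alone; prefix "# " to lines with a swap field.
  sed -i.bak -E '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/# \1/' "$1"
}
```

Running it against a copy of /etc/fstab and reviewing the result before touching the real file keeps the change easy to undo.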

- Disable SELinux

# Temporary

$ sudo setenforce 0

# Permanent

$ sudo vi /etc/sysconfig/selinux

...

SELINUX=disabled

- Disable the firewall

$ sudo systemctl disable firewalld

$ sudo systemctl stop firewalld

- Enable bridge networking (let iptables see bridged traffic)

# CentOS

$ sudo vim /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

# Ubuntu

$ sudo vim /etc/ufw/sysctl.conf

net/bridge/bridge-nf-call-ip6tables = 1

net/bridge/bridge-nf-call-iptables = 1

net/bridge/bridge-nf-call-arptables = 1
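Both file formats above (dots on CentOS, slashes in the ufw file on Ubuntu) set the same kernel keys. The sketch below checks that a given config file enables the two iptables-related keys; the function name `bridge_keys_enabled` is hypothetical. Note that these keys only exist once the `br_netfilter` module is loaded (`sudo modprobe br_netfilter`), and files under /etc/sysctl.d are applied with `sudo sysctl --system`.

```shell
# Hypothetical helper: succeed only if both bridge-nf-call keys are set to 1.
# $1: path to a sysctl-style config file (dot or slash separated keys).
bridge_keys_enabled() {
  for key in bridge-nf-call-iptables bridge-nf-call-ip6tables; do
    grep -Eq "^[[:space:]]*net[./]bridge[./]${key}[[:space:]]*=[[:space:]]*1[[:space:]]*$" "$1" || return 1
  done
}

# Usage: bridge_keys_enabled /etc/sysctl.d/k8s.conf && echo "bridge keys enabled"
```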

Docker Install

- CentOS install: https://docs.docker.com/engine/install/centos/

Cgroup driver issue

- Recent Kubernetes versions recommend changing the Docker cgroup driver from cgroupfs to systemd

- Otherwise a WARNING is printed during master init and worker join

- https://kubernetes.io/ko/docs/setup/production-environment/container-runtimes/

kubeadm init --pod-network-cidr 10.244.0.0/16

...

[init] Using Kubernetes version: v1.19.3

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver.

The recommended driver is "systemd". ...

...

- Changing the driver

- Write /etc/docker/daemon.json

$ cat <<EOF | sudo tee /etc/docker/daemon.json

{

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2",

"storage-opts": [

"overlay2.override_kernel_check=true"

]

}

EOF

# Restart Docker

$ sudo systemctl restart docker

# Verify

$ sudo docker info | grep -i cgroup

Cgroup Driver: systemd
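The same check can be turned into a guard that runs before `kubeadm init`. A minimal sketch, assuming the `Cgroup Driver: <name>` line format shown above; the function name `cgroup_driver` is hypothetical.

```shell
# Hypothetical helper: read `docker info` text on stdin and print the cgroup driver.
cgroup_driver() {
  # Split on ": " and match the "Cgroup Driver" label regardless of leading spaces or case.
  awk -F': *' 'tolower($1) ~ /cgroup driver$/ { print $2; exit }'
}

# Usage on a Docker host:
#   [ "$(sudo docker info 2>/dev/null | cgroup_driver)" = "systemd" ] \
#     || echo "Docker cgroup driver is not systemd" >&2
```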

Installing Kubernetes (kubeadm, kubelet, kubectl)

- Reference: https://kubernetes.io/docs/setup/independent/install-kubeadm/

Kubernetes installation: CentOS 7

- Install Docker

sudo yum install -y docker

sudo systemctl enable docker && sudo systemctl start docker

sudo usermod -aG docker $USER

- kubeadm, kubelet, kubectl: add the repo and install the packages

$ cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

EOF

$ sudo yum install -y kubelet kubeadm kubectl

$ sudo systemctl enable kubelet && sudo systemctl start kubelet

# Pin a version if the default one does not match

# sudo yum install kubelet-[version] kubeadm-[version] kubectl-[version]

- kubectl autocompletion

# bash

source <(kubectl completion bash)

echo "source <(kubectl completion bash)" >> ~/.bashrc

# zsh

source <(kubectl completion zsh)

echo 'if [ $commands[kubectl] ]; then source <(kubectl completion zsh); fi' >> ~/.zshrc

Master Node Init and Worker Node Join

Master node setup

- Initialize the master

- The Pod network CIDR must be set: --pod-network-cidr 10.244.0.0/16

sudo kubeadm init --pod-network-cidr 10.244.0.0/16

- Using kubectl: run the three commands listed under "To start using your cluster" in the output below

[init] Using Kubernetes version: v1.10.5

...

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

...

You can now join any number of machines by running the following on each node

as root:

kubeadm join 192.168.28.15:6443 --token 1ovd36.ft4mefr909iotg0a --discovery-token-ca-cert-hash sha256:82953a3ed178aa8c511792d0e21d9d3283e7575f3d3350a00bea3e34c2b87d29

- Check Pod status

- coredns STATUS is Pending because no overlay network is installed yet

$ kubectl get po -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-66bff467f8-ktvsz 0/1 Pending 0 19s

kube-system coredns-66bff467f8-nvvjz 0/1 Pending 0 19s

kube-system etcd-node1 1/1 Running 0 29s

kube-system kube-apiserver-node1 1/1 Running 0 29s

kube-system kube-controller-manager-node1 1/1 Running 0 29s

kube-system kube-proxy-s582x 1/1 Running 0 19s

kube-system kube-scheduler-node1 1/1 Running 0 29s

Overlay network: installing Calico

- Overlay network options

- https://kubernetes.io/docs/concepts/cluster-administration/networking/

- Install Calico for on-premises deployments

# Install Calico for on-premises deployments

$ kubectl apply -f https://docs.projectcalico.org/manifests/calico-typha.yaml

- coredns moves from Pending to Running

$ kubectl get po -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-799fb94867-bcntz 0/1 CrashLoopBackOff 3 2m6s

kube-system calico-node-jtcmt 0/1 Running 1 2m7s

kube-system calico-typha-6bc9dd6468-x2hjj 0/1 Pending 0 2m6s

kube-system coredns-66bff467f8-ktvsz 0/1 Running 0 3m23s

kube-system coredns-66bff467f8-nvvjz 0/1 Running 0 3m23s

kube-system etcd-node1 1/1 Running 0 3m33s

kube-system kube-apiserver-node1 1/1 Running 0 3m33s

kube-system kube-controller-manager-node1 1/1 Running 0 3m33s

kube-system kube-proxy-s582x 1/1 Running 0 3m23s

kube-system kube-scheduler-node1 1/1 Running 0 3m33s

Adding worker nodes (Join)

- Run on the worker node

# Get the join command (on the master)

$ kubeadm token create --print-join-command

kubeadm join 192.168.28.15:6443 --token 1ovd36.ft4mefr909iotg0a --discovery-token-ca-cert-hash sha256:82953a3ed178aa8c511792d0e21d9d3283e7575f3d3350a00bea3e34c2b87d29

# Run on the worker node

$ kubeadm join 192.168.28.15:6443 --token 1ovd36.ft4mefr909iotg0a --discovery-token-ca-cert-hash sha256:82953a3ed178aa8c511792d0e21d9d3283e7575f3d3350a00bea3e34c2b87d29

- Check node status

$ kubectl get node

NAME STATUS ROLES AGE VERSION

node1 Ready master 8m50s v1.18.6

node2 Ready <none> 16s v1.18.6

node3 Ready <none> 16s v1.18.6
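When several workers join at once, it helps to list only the nodes that are not Ready yet. A minimal sketch that filters the table format shown above; the function name `not_ready_nodes` is hypothetical, and a status such as `Ready,SchedulingDisabled` is deliberately reported as not ready.

```shell
# Hypothetical helper: read `kubectl get node` text on stdin and
# print the names of nodes whose STATUS column is not exactly "Ready".
not_ready_nodes() {
  # NR > 1 skips the header row; $1 is NAME, $2 is STATUS.
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Usage: kubectl get node | not_ready_nodes
```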

Deploying a service: CLI-based

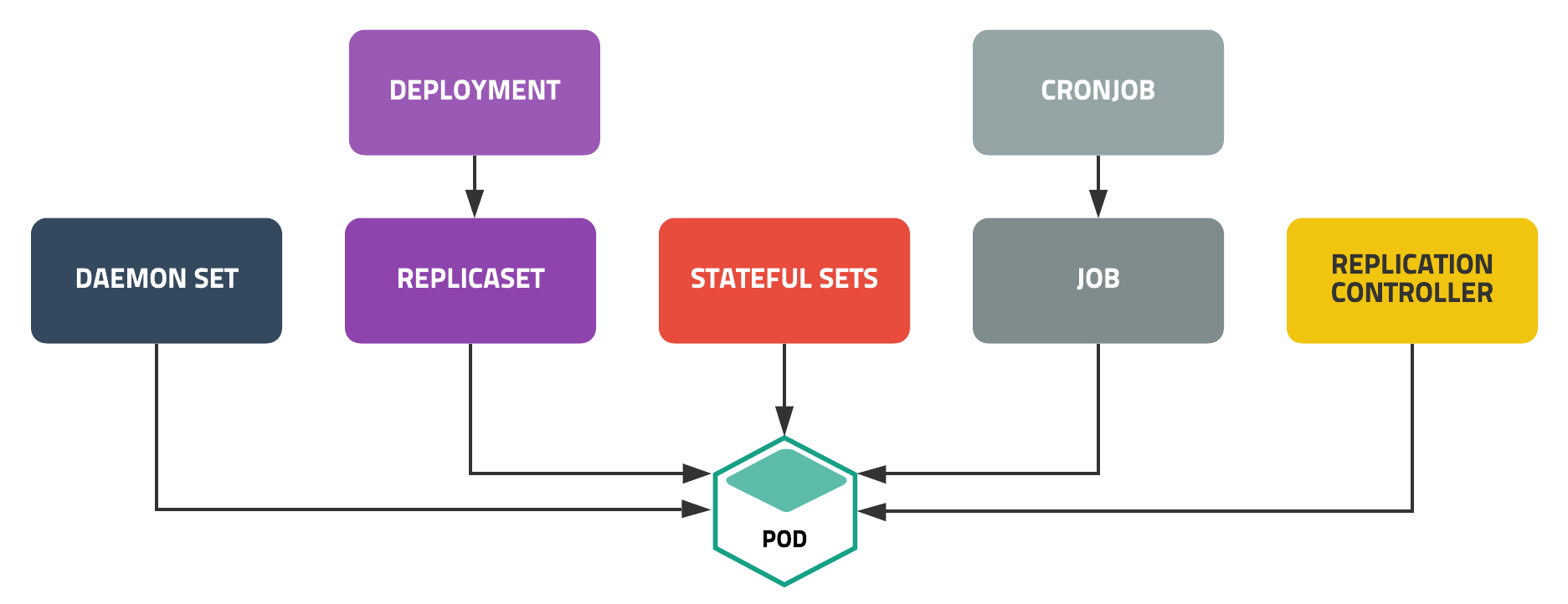

Adding a Deployment / Service (Deployment/ReplicaSet)

- Build the Docker image and push it to Docker Hub; a production service needs a private registry

- Create the Pod and Deployment with kubectl create deployment

- Create a Service from the Deployment with kubectl expose

- Kubernetes NodePort vs LoadBalancer vs Ingress? When should I use what?

# Create the Pod and Deployment

$ kubectl create deployment mvcapp --image=cdecl/mvcapp:0.3

deployment.apps/mvcapp created

# Create the Service

$ kubectl expose deploy/mvcapp --type=NodePort --port=80 --name=mvcapp --target-port=80

service/mvcapp exposed

$ kubectl get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

mvcapp 1/1 1 1 24s

$ kubectl get po

NAME READY STATUS RESTARTS AGE

mvcapp-7b6b66bd55-g26wg 1/1 Running 0 34s

$ kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

mvcapp NodePort 10.107.145.24 <none> 80:31521/TCP 20s

# Check the Service through its NodePort

$ curl localhost:31521

...

<div>Project: Mvcapp</div>

<div>Hostname: mvcapp-7b6b66bd55-g26wg</div>

<div>Request Count : 1</div>

<div>Version: 0.3</div>

...

Scale / image update (rollout)

- Scale: adjust the number of Pod replicas

$ kubectl scale deployment/mvcapp --replicas=4

deployment.apps/mvcapp scaled

$ kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

mvcapp-7b6b66bd55-4gppf 1/1 Running 0 78s 10.244.135.3 node3 <none> <none>

mvcapp-7b6b66bd55-4gssq 1/1 Running 0 78s 10.244.104.4 node2 <none> <none>

mvcapp-7b6b66bd55-4lqrt 1/1 Running 0 78s 10.244.135.2 node3 <none> <none>

mvcapp-7b6b66bd55-g26wg 1/1 Running 0 7m14s 10.244.104.3 node2 <none> <none>

- Image update (version upgrade rollout): 0.3 → 0.4

$ kubectl set image deployment/mvcapp mvcapp=cdecl/mvcapp:0.4

deployment.apps/mvcapp image updated

$ curl localhost:31521

...

<div>Project: Mvcapp</div>

<div>Hostname: mvcapp-78bbf7db4b-5fkdz</div>

<div>RemoteAddr: 10.244.166.128</div>

<div>X-Forwarded-For: </div>

<div>Request Count : 1</div>

<div>Version: 0.4</div>

...

$ kubectl rollout history deployment/mvcapp

deployment.apps/mvcapp

REVISION CHANGE-CAUSE

1 <none>

2 <none>

- Roll back to the previous version

$ kubectl rollout undo deployment/mvcapp

deployment.apps/mvcapp rolled back

Deploying a service: YAML-based

Adding a Deployment / Service

- Define the desired state declaratively in a YAML file

- Deployment and NodePort Service definition

# mvcapp-deploy-service.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: mvcapp
spec:
  selector:
    matchLabels:
      app: mvcapp
  replicas: 2 # same as the --replicas=2 option
  template: # create pods using pod definition
    metadata:
      labels:
        app: mvcapp
    spec:
      containers:
      - name: mvcapp
        image: cdecl/mvcapp:0.3
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: mvcapp
spec:
  type: NodePort
  selector:
    app: mvcapp
  ports:
  - port: 80
    targetPort: 80

- Apply the YAML file

# Apply the YAML file

$ kubectl apply -f mvcapp-deploy-service.yaml

deployment.apps "mvcapp" created

service "mvcapp" created

$ kubectl get deploy -o wide

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

mvcapp 2/2 2 2 4h47m mvcapp cdecl/mvcapp:0.3 app=mvcapp

$ kubectl get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

mvcapp-6b98dfc657-27zfr 1/1 Running 0 48m 10.244.135.18 node3 <none> <none>

mvcapp-6b98dfc657-p8hh4 1/1 Running 0 48m 10.244.104.27 node2 <none> <none>

$ kubectl get svc -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

mvcapp NodePort 10.106.102.27 <none> 80:30010/TCP 4h47m app=mvcapp

Service types: ClusterIP vs NodePort vs LoadBalancer

- https://medium.com/google-cloud/kubernetes-nodeport-vs-loadbalancer-vs-ingress-when-should-i-use-what-922f010849e0

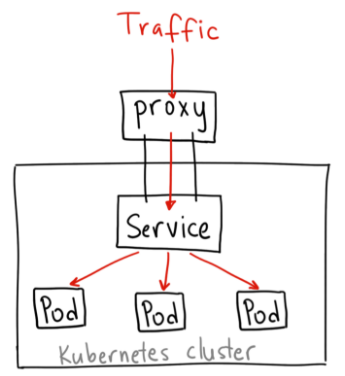

ClusterIP

- A ClusterIP service is the default Kubernetes service

- The address is valid and reachable only inside the cluster

- Exposing it externally requires a separate proxy

$ kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.43.0.1 <none> 443/TCP 2d18h

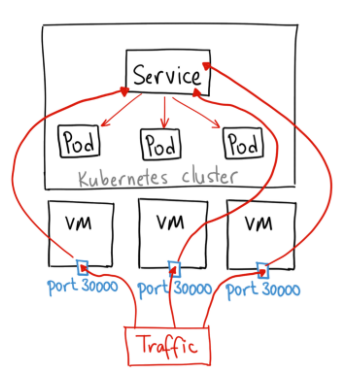

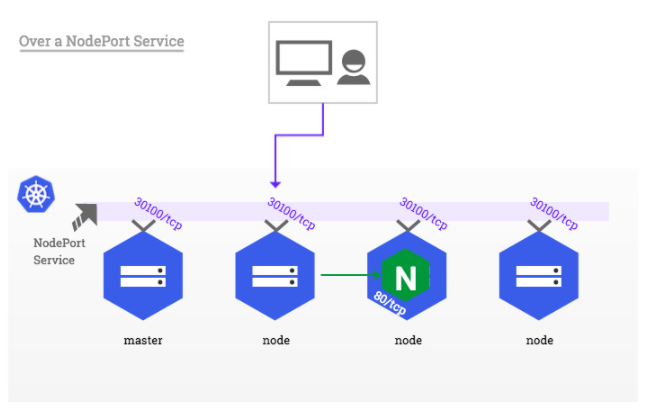

NodePort

- A Service type that adds node-level exposure on top of ClusterIP

- kube-proxy on each node exposes the Service on a port in the 30000-32767 range

- The port can be set with nodePort; if omitted, one is assigned at random

$ kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

mvcapp NodePort 10.43.37.45 <none> 80:30010/TCP 2d18h
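Before pinning a nodePort in a manifest, the value can be checked against the range quoted above. A minimal sketch, assuming the 30000-32767 range stated in this post (clusters started with a different `--service-node-port-range` on kube-apiserver would need other bounds); the function name `is_valid_node_port` is hypothetical.

```shell
# Hypothetical helper: succeed if $1 is an integer inside the NodePort range 30000-32767.
is_valid_node_port() {
  case "$1" in
    ''|*[!0-9]*) return 1 ;;  # reject empty or non-numeric input
  esac
  [ "$1" -ge 30000 ] && [ "$1" -le 32767 ]
}

# Usage: is_valid_node_port 30010 && echo "ok to use as nodePort"
```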

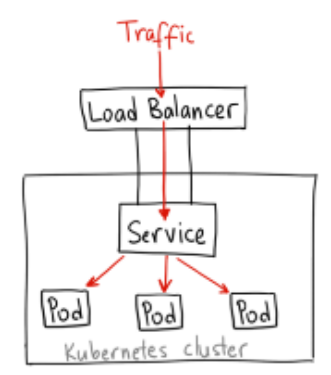

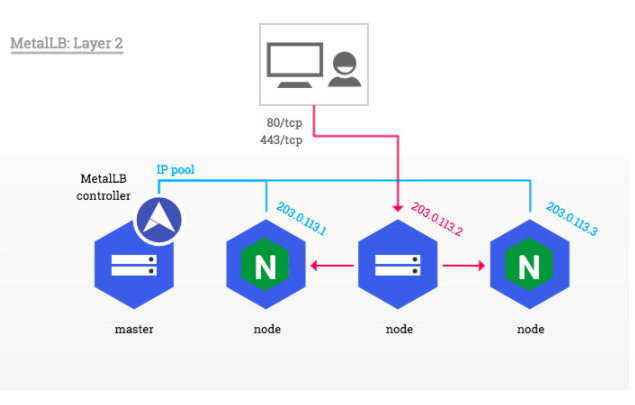

LoadBalancer

- An external load balancer (L4/L7) registers the worker nodes automatically and reaches the Service through its NodePort

- The standard way to expose a Service; supported natively only by cloud providers

- On bare metal, use MetalLB

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

mvcapp LoadBalancer 172.20.43.194 xxxxx-885321513.ap-northeast-2.elb.amazonaws.com 80:30291/TCP 10d

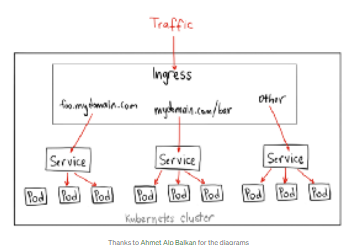

Ingress

- Not a Service type; it acts as an L7 "smart router" or entry point in front of Services

- The Kubernetes resource that plays the role of a typical L7 proxy

$ kubectl get po -A

NAMESPACE NAME READY STATUS RESTARTS AGE

...

ingress-nginx default-http-backend-65dd5949d9-mlr9f 1/1 Running 0 2d19h

ingress-nginx nginx-ingress-controller-q4j6b 1/1 Running 0 2d19h

ingress-nginx nginx-ingress-controller-rngt9 1/1 Running 0 2d19h

...

$ kubectl get ing

NAME CLASS HOSTS ADDRESS PORTS AGE

eks-ingress <none> * xxxxx-1951376961.ap-northeast-2.elb.amazonaws.com 80 9d

Kubernetes DNS

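- Provides the naming rules valid inside the cluster and answers DNS lookups for Services

- CoreDNS is supported on Kubernetes 1.15 clusters; clusters on older Kubernetes versions use kube-dns as the default DNS provider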

$ kubectl get po -A

NAMESPACE NAME READY STATUS RESTARTS AGE

...

kube-system coredns-6f85d5fb88-njg26 1/1 Running 0 2d19h

kube-system coredns-6f85d5fb88-ns99n 1/1 Running 0 2d18h

kube-system coredns-autoscaler-79599b9dc6-gtpzq 1/1 Running 0 2d19h

$ kubectl get svc -A

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kube-system kube-dns ClusterIP 10.43.0.10 <none> 53/UDP,53/TCP,9153/TCP 2d19h

Lookup rules

- https://kubernetes.io/ko/docs/concepts/services-networking/dns-pod-service/

- Service:

[service-name].[namespace].svc.cluster.local

$ nslookup

> lserver 10.43.0.10

Default server: 10.43.0.10

Address: 10.43.0.10#53

> mvcapp.default.svc.cluster.local

Server: 10.43.0.10

Address: 10.43.0.10#53

Name: mvcapp.default.svc.cluster.local

Address: 10.43.37.45
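The naming rule above is simple enough to generate in scripts. A minimal sketch; the function name `service_fqdn` is hypothetical, and the `default` namespace and `cluster.local` cluster domain are assumed defaults taken from the lookup shown here.

```shell
# Hypothetical helper: build [service-name].[namespace].svc.[cluster-domain].
# $1: service name, $2: namespace (default "default"), $3: cluster domain (default "cluster.local")
service_fqdn() {
  printf '%s.%s.svc.%s\n' "$1" "${2:-default}" "${3:-cluster.local}"
}

# Usage: nslookup "$(service_fqdn mvcapp)" 10.43.0.10
```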

Settings inside a Pod: /etc/resolv.conf

- Shows the search domains applied to name lookups from this Pod: default.svc.cluster.local, svc.cluster.local, cluster.local

$ kubectl exec -it mvcapp-6cc9667f94-ljvjx -- /bin/bash

root@mvcapp-6cc9667f94-ljvjx:/app$ cat /etc/resolv.conf

nameserver 10.43.0.10

search default.svc.cluster.local svc.cluster.local cluster.local

options ndots:5
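The `search` and `ndots:5` lines explain why a short name such as `mvcapp` resolves inside the Pod: a name with fewer dots than `ndots` is tried with each search domain first and as-is last. The sketch below lists the candidate names in that order; the function name `candidate_names` is hypothetical, and it models the usual resolver behaviour rather than querying DNS.

```shell
# Hypothetical helper: print the names a resolver would try, in order.
# $1: name to resolve, $2: space-separated search domains, $3: ndots (default 5)
candidate_names() {
  name=$1
  ndots=${3:-5}
  case "$name" in
    *.) printf '%s\n' "$name"; return ;;  # trailing dot: already fully qualified
  esac
  # Count the dots in the name; the arithmetic expansion strips wc padding.
  dots=$(( $(printf '%s' "$name" | tr -cd '.' | wc -c) ))
  if [ "$dots" -ge "$ndots" ]; then printf '%s\n' "$name"; fi
  for domain in $2; do
    printf '%s.%s\n' "$name" "$domain"
  done
  if [ "$dots" -lt "$ndots" ]; then printf '%s\n' "$name"; fi
}

# Usage:
#   candidate_names mvcapp "default.svc.cluster.local svc.cluster.local cluster.local"
```

With the search list from this Pod, `mvcapp` is first tried as `mvcapp.default.svc.cluster.local`, which is the Service name resolved earlier.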

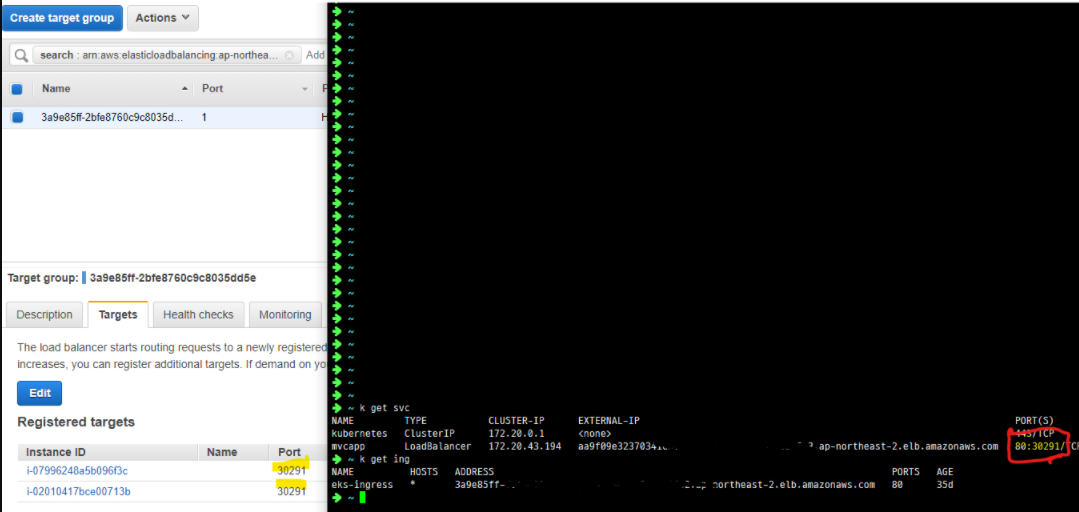

Exposing a service (AWS)

AWS

- type: LoadBalancer: setting the Service type to LoadBalancer assigns an EXTERNAL-IP automatically

- Ingress: an ALB is provisioned through annotations

- EKS cluster creation and Ingress

apiVersion: v1
kind: Service
metadata:
  name: mvcapp
spec:
  type: LoadBalancer # ←
  selector:
    app: mvcapp
  ports:
  - port: 80
    targetPort: 80

$ kubectl get svc -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

mvcapp LoadBalancer 172.20.9.13 xx-651061089.ap-northeast-2.elb.amazonaws.com 80:30731/TCP 30d app=mvcapp

AWS Ingress

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: eks-ingress
  annotations:
    kubernetes.io/ingress.class: alb
    #alb.ingress.kubernetes.io/target-type: ip # ip, instance
    alb.ingress.kubernetes.io/scheme: internet-facing # internal, internet-facing
spec:
  rules:
  - host: mvcapp.cdecl.net
    http:
      paths:
      - backend:
          serviceName: mvcapp
          servicePort: 80

$ kubectl get ing -o wide

NAME HOSTS ADDRESS PORTS AGE

eks-ingress * xx-default-eksingres-ea83.ap-northeast-2.elb.amazonaws.com 80 30d

Exposing a service (bare metal)

- Bare-metal considerations: https://kubernetes.github.io/ingress-nginx/deploy/baremetal/

- Understanding Kubernetes networking: https://coffeewhale.com/k8s/network/2019/05/30/k8s-network-03/

Using MetalLB

- Uses memberlist together with ARP (IPv4) / NDP (IPv6) to manage which node leads for a load-balancer IP

- MetalLB vs Keepalived: https://metallb.universe.tf/concepts/layer2/#comparison-to-keepalived

- Keepalived uses the Virtual Router Redundancy Pro

- MetalLB on the other hand relies on memberlist to know when a Node in the cluster is no longer reachable and the service IPs from that node should be moved elsewhere.

- Installation: https://metallb.universe.tf/installation/#installation-by-manifest

# On first install only

$ kubectl create secret generic -n metallb-system memberlist --from-literal=secretkey="$(openssl rand -base64 128)"

- ConfigMap

apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: default
      pro
      addresses:
      - 192.168.28.100-192.168.28.103

- A Service with type: LoadBalancer is assigned an IP from the addresses range (a specific one can be requested with loadBalancerIP)

apiVersion: v1
kind: Service
metadata:
  name: mvcapp
spec:
  type: LoadBalancer
  #loadBalancerIP: 192.168.28.100
  selector:
    app: mvcapp
  ports:
  - port: 80
    targetPort: 80

$ curl 192.168.28.100

NodePort : Over a NodePort Service

- Uses the NodePort in the 30000-32767 range, assigned automatically (or manually)

apiVersion: v1
kind: Service
metadata:
  name: mvcapp
spec:
  type: NodePort
  selector:
    app: mvcapp
  ports:
  - port: 80
    targetPort: 80
    # nodePort: 30010 # set a specific port

# Check PORT(S)

$ kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

mvcapp NodePort 10.106.102.27 <none> 80:30010/TCP 128m

# Reachable through the master and worker IPs

$ curl localhost:30010

$ curl 192.168.28.16:30010

External IPs

- Generally not recommended

- Expose the Service by setting externalIPs on it

spec:
  externalIPs:
  - 192.168.28.15
  - 192.168.28.16
  - 192.168.28.17

$ netstat -an | grep 'LISTEN '

tcp 0 0 192.168.28.15:80 0.0.0.0:* LISTEN

$ ansible all -m shell -a "netstat -an | grep 'LISTEN ' | grep ':80' "

node3 | CHANGED | rc=0 >>

tcp 0 0 192.168.28.17:80 0.0.0.0:* LISTEN

node1 | CHANGED | rc=0 >>

tcp 0 0 192.168.28.15:80 0.0.0.0:* LISTEN

node2 | CHANGED | rc=0 >>

tcp 0 0 192.168.28.16:80 0.0.0.0:* LISTEN

Ingress controller

- Routes external traffic to Services by domain and URL (an L7 function)

- Installed with RBAC (role-based access control)

- This guide uses the NGINX-based Ingress controller, the most widely used of them

- ingress-nginx repository: https://github.com/kubernetes/ingress-nginx

- Installation Guide: https://github.com/kubernetes/ingress-nginx/blob/master/docs/deploy/index.md

# Bare-metal Using NodePort:

namespace/ingress-nginx created

serviceaccount/ingress-nginx created

configmap/ingress-nginx-controller created

clusterrole.rbac.authorization.k8s.io/ingress-nginx created

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx created

role.rbac.authorization.k8s.io/ingress-nginx created

rolebinding.rbac.authorization.k8s.io/ingress-nginx created

service/ingress-nginx-controller-admission created

service/ingress-nginx-controller created

deployment.apps/ingress-nginx-controller created

validatingwebhookconfiguration.admissionregistration.k8s.io/ingress-nginx-admission created

serviceaccount/ingress-nginx-admission created

clusterrole.rbac.authorization.k8s.io/ingress-nginx-admission created

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created

role.rbac.authorization.k8s.io/ingress-nginx-admission created

rolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created

job.batch/ingress-nginx-admission-create created

job.batch/ingress-nginx-admission-patch created

- Apply the Ingress rule

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: main-ingress
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
  # - host: mvcapp.cdecl.net
  - http:
      paths:
      - backend:
          serviceName: mvcapp
          servicePort: 80

- Check the Ingress

$ kubectl get ing

NAME CLASS HOSTS ADDRESS PORTS AGE

main-ingress <none> * 192.168.28.16 80 25s

Other network analysis

Route

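- Traffic is sent to the gateway for the Pod's subnet according to the route table

- ① A Pod on the node that received the request has gateway 0.0.0.0 and is handled on that node

- ② A Pod on another node is reached through the server (gateway) for its subnet, handled there as in ①, and the response is returned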

AWS EKS LoadBalancer

- The Service is reached through the NodePort interface of the ALB/NLB target group

High-availability (HA) topology

- https://kubernetes.io/ko/docs/setup/production-environment/tools/kubeadm/ha-topology/

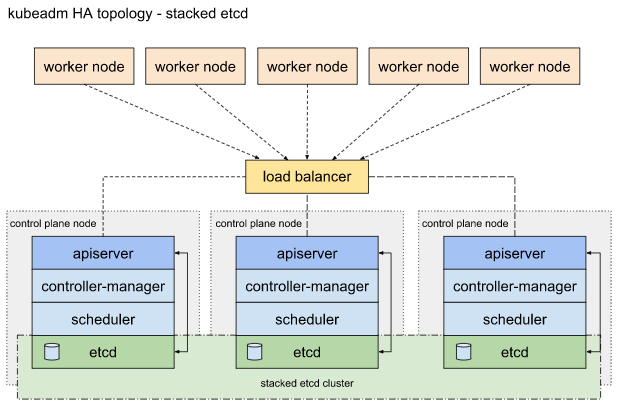

Stacked etcd topology

- Add kubeadm-managed nodes that run the control plane components (--control-plane)

Master node setup

- Extra options are needed on top of the single-master setup

- A load balancer for kube-apiserver is required: --control-plane-endpoint "LOAD_BALANCER_IP:PORT"

- --upload-certs: uploads the certificates so they are distributed automatically

# kube01

$ kubeadm init --pod-network-cidr 10.244.0.0/16 --control-plane-endpoint "10.239.36.184:6443" --upload-certs

...

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

...

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join 10.239.36.184:6443 --token 62f4ot.y6c026tnpxmanqrk \

--discovery-token-ca-cert-hash sha256:5c7b19f57085d582d216681924a869a3fa66dd042f55fdfd3c7ac32115f01c18 \

--control-plane --certificate-key 11f38363a6f08d6d89e8b37a00599a0ffbd9a5210d86b9709ffed7249af23716

...

kubeadm join 10.239.36.184:6443 --token 62f4ot.y6c026tnpxmanqrk \

--discovery-token-ca-cert-hash sha256:5c7b19f57085d582d216681924a869a3fa66dd042f55fdfd3c7ac32115f01c18

- The output now also includes a control-plane join command

# kube04

$ kubeadm join 10.239.36.184:6443 --token 62f4ot.y6c026tnpxmanqrk \

--discovery-token-ca-cert-hash sha256:5c7b19f57085d582d216681924a869a3fa66dd042f55fdfd3c7ac32115f01c18 \

--control-plane --certificate-key 11f38363a6f08d6d89e8b37a00599a0ffbd9a5210d86b9709ffed7249af23716

$ kubectl get node

NAME STATUS ROLES AGE VERSION

kube01 Ready master 8m58s v1.19.3

kube02 Ready <none> 5m v1.19.3

kube03 Ready <none> 4m50s v1.19.3

kube04 Ready master 2m7s v1.19.3

Removing a control plane node from the HA cluster

- Run reset on the node to be removed

# kube04

$ sudo kubeadm reset

- Do not remove a control plane node with kubectl delete node.

- If it is deleted with kubectl delete node, control plane nodes added afterwards cannot join the HA cluster.

- The reason is that kubeadm reset removes the node's etcd endpoint from the etcd on the other control plane nodes, whereas kubectl delete node does not.

- As a result, a control plane node that joins later cannot reach the deleted node's etcd endpoint, and the etcd validation step fails.

- Reference: https://fliedcat.tistory.com/170

Nginx configuration (for reference)

- Reverse proxy settings for the load balancer

# /etc/nginx/nginx.conf

stream {

upstream kube {

server kube01:6443;

server kube04:6443;

}

server {

listen 6443;

proxy_pass kube;

}

}

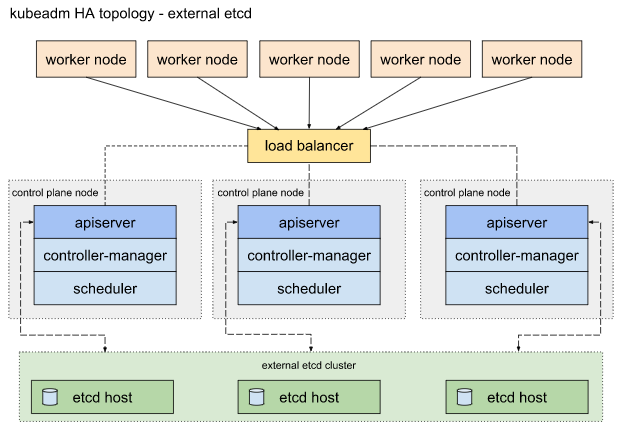

External etcd topology

- Runs etcd on separate servers

- This topology needs twice as many hosts as the stacked topology. An HA cluster with this topology needs at least three control plane nodes and three etcd nodes.
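The minimum of three etcd nodes follows from majority quorum: a cluster of n members needs n/2 + 1 of them (integer division) to agree, so it survives n minus that many failures. The arithmetic can be sketched as follows; both function names are hypothetical.

```shell
# Hypothetical helpers: majority quorum and tolerated failures for an n-member etcd cluster.
etcd_quorum() {
  echo $(( $1 / 2 + 1 ))
}

etcd_fault_tolerance() {
  echo $(( $1 - ($1 / 2 + 1) ))
}

# Usage: etcd_fault_tolerance 3
```

Two members tolerate no failures at all, which is why three is the smallest size that gives any redundancy, and an even member count adds no tolerance over the odd count below it.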

Resetting Kubernetes

$ sudo kubeadm reset -f